The best way to understand the performance of a program is to run it with a profiler attached, but that isn't always possible. Maybe the program isn't written yet, and you want to investigate the performance of a possible design before committing to a full implementation. Or maybe you are curious to learn more about the performance implications of specific language or runtime features. For instance Pete wants to know whether it is faster to check for an object being null, or to just go ahead and call a method on it, then catch the resulting NullReferenceException.

Sorry Pete: I'm not going to answer that specifically. But I am going to explain how you can find out for yourself.

A microbenchmark is when you take a small piece of code, run it in isolation, and time how long it takes. Simple, right?

But benchmarks are tricksy things. Due to the fundamental interconnectedness of all things, when you run a piece of code outside its normal context, that tends to alter how long it takes. This can be a good thing if you are engaged in a forum flamefest and looking for data to back up an argument (you can prove anything with a benchmark, if you are clever about how you set it up :-) but not so desirable when you want to learn facts that apply to the real world!

To demonstrate some issues to watch out for, I created a new Windows Console Application project and wrote a simple benchmark that times how long it takes to sum all the integers from zero to a million:

using System; using System.Diagnostics; class Program { static void Main(string[] args) { Stopwatch timer = Stopwatch.StartNew(); int sum = 0; for (int i = 0; i < 1000000; i++) { sum += i; } TimeSpan elapsed = timer.Elapsed; Console.WriteLine(elapsed); Console.WriteLine("Press any key to continue"); Console.ReadKey(); } }

I ran this three times in a row, by selecting the Release mode project configuration, then pressing F5 in Visual Studio. My 3 ghz Intel Core 2 Duo gave the following timings:

00:00:00.0027847

00:00:00.0034728

00:00:00.0020457

The worrying thing is that I got quite different results each time. Which one to believe?

This is a common problem if a benchmark executes too quickly. When measuring something very small, even tiny imprecisions in the clock source can dramatically throw off the results, not to mention if Outlook happens to wake up and check for new email in the middle of the test.

The best way to get stable results is to repeat the test many times and average the results:

using System; using System.Diagnostics; using System.Collections.Generic; using System.Linq; class Program { static void Main(string[] args) { const int passCount = 100; List<double> elapsed = new List<double>(); for (int pass = 0; pass < passCount; pass++) { Stopwatch timer = Stopwatch.StartNew(); int sum = 0; for (int i = 0; i < 1000000; i++) { sum += i; } elapsed.Add(timer.Elapsed.TotalSeconds); } double average = elapsed.Average(); double variance = (from e in elapsed select Math.Pow(average – e, 2)).Average(); double deviation = Math.Sqrt(variance); Console.WriteLine("Average time: {0}", average); Console.WriteLine("Standard deviation: {0}", deviation); Console.WriteLine("Press any key to continue"); Console.ReadKey(); } }

For bonus points, this code also computes the standard deviation of the time measurements, which provides a useful sanity check. In theory, each pass should take a similar amount of time, in which case the standard deviation will be much smaller than the average. If the deviation is too wide, that means something funky is going on, in which case I must investigate further before I can trust the results. But in this case all looks good:

Average time: 0.002947593

Standard deviation: 0.000146231002359281

Press any key to continue

But...

These results are meaningless, because I was pressing F5 to run my test, and the CLR disables JIT optimization when a debugger is attached. To run with optimization I must use Ctrl+F5, which gives an average of 0.000356319. That's an 8x difference just from holding down a modifier key!

But...

These results are still meaningless, because my test goes to all the trouble to loop over a million numbers and add them up, but doesn't actually do anything with the resulting value. A smart compiler or JIT optimizer might realize this value is unnecessary, and remove the computations that generate it. An even smarter optimizer might then realize the for loop is now empty, and remove it entirely, in which case I wouldn't actually be timing anything at all!

Benchmarks must take care to use all the data they compute, so the optimizer cannot remove the code that computes it. The easiest way is to print results to the screen (which obviously needs to be done outside the timer block, because we don't want to include I/O calls in our timing results). Add the line shown in bold immediately before the closing brace of the outer pass loop:

elapsed.Add(timer.Elapsed.TotalSeconds);

Console.WriteLine(sum);

}

Now I get an average of 0.000588847, which proves the optimizer was indeed removing at least some part of my unused calculations. But if I run this latest version using F5 rather than Ctrl+F5, I get the same 0.0029 time as before, which proves that when the debugger is attached, the optimizer does not remove the calculations regardless of whether their result is used.

But this is still not the end of the article...

This last gotcha has been the ruin of many a young benchmarker. Let's change our test code to sum the contents of an array, so it will be touching lots of memory:

int[] testData = new int[1000000]; for (int i = 0; i < testData.Length; i++) testData[i] = i; for (int pass = 0; pass < passCount; pass++) { Stopwatch timer = Stopwatch.StartNew(); int sum = 0; foreach (int value in testData) { sum += value; } elapsed.Add(timer.Elapsed.TotalSeconds); Console.WriteLine(sum); }

This gives an average of 0.000716806, which is only marginally slower than before.

Now try this version:

int[] testData = new int[1000000]; for (int i = 0; i < testData.Length; i++) testData[i] = i; const int cacheWipeSize = 16 * 1024 * 1024; byte[] cacheWipeBuffer = new byte[cacheWipeSize]; for (int pass = 0; pass < passCount; pass++) { for (int i = 0; i < cacheWipeSize; i++) { cacheWipeBuffer[i]++; } Stopwatch timer = Stopwatch.StartNew(); int sum = 0; foreach (int value in testData) { sum += value; } elapsed.Add(timer.Elapsed.TotalSeconds); Console.WriteLine(sum); }

This gives an average of 0.0015416479.

At which point the attentive reader may well be thinking, "wtf? Our test just got 2x slower, but the new code comes before the Stopwatch.StartNew call! How can that possibly be?"

This demonstrates the impact of memory bandwidth and caching on software performance. By touching a large amount of unrelated memory before each pass, we make sure the testData array will be evicted from cache, so we are now timing main memory as opposed to L2 cache lookups.

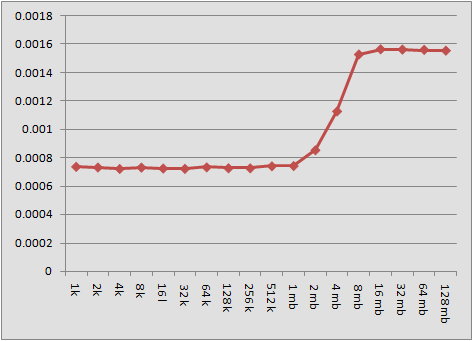

Changing the cacheWipeSize constant produces the following timing results:

Anyone care to guess how big L2 cache is on my machine?

Memory access and caching is one of the hardest things to benchmark, because it is incredibly sensitive to context. Most small benchmarks (especially if repeated many times in a loop) will encounter warm cache lines, which is not a good representation of how the same code will perform inside a larger application. If you only ever measure with warm caches, benchmarks will prefer algorithms that use low numbers of CPU instructions, even if they are less cache efficient, over more cache efficient algorithms that require more CPU instructions. But in the real world, where cache lines are often cold, the latter algorithms may be more efficient.

If a benchmark touches memory, you should try it with both warm and cold caches, using a cache wipe buffer as shown above. Comparing these two results gives a more detailed picture than either one alone, but this is still only an approximation of how things will behave in your actual program. Cache performance also depends on the size of the data set (how big is the array being summed?), on what other code runs between each call to the routine in question (is it called many times in rapid succession, or do other memory hungry algorithms intervene?), and on what other CPU cores may be contending for cache at the same time.

And that's just the data cache. Don't forget there is also an instruction cache and usually a branch prediction cache...