Many people have a mental model of how computers work that goes something like:

"A computer is like a small cardboard box. Inside this box lives an elf. The elf obeys instructions from my program, in the order they are given. Some instructions tell the elf to draw pictures onto the screen."

Functionally, this description is correct. If computers really did work that way, they would behave exactly the same as they do today, and could run the same programs.

This description is not enough to understand graphics performance, however. It oversimplifies to the point of uselessness. Here is a more accurate model:

"A computer is like a cardboard box inhabited by a pair of elves, Charles Pitchwife Underhill plus his younger sibling George Pekkala Underhill (both more commonly known by their initials).

Charles is smart, well educated, and fluent in dozens of languages (C, C++, C#, and Python, to name a few).

George, on the other hand, is an autistic savant. He finds it difficult to communicate with anyone other than his brother Charles, prefers to plan his day well in advance, and gets flustered if asked to change activities with insufficient warning. He has an amazing ability to multiply floating point numbers, especially enjoying computations that involve vectors and matrices.

When you run a program on this computer, Charles reads it and does whatever it says. Any time he encounters a graphics drawing instruction, he notes that down on a piece of paper. At some later point (when the paper fills up, or if he sees a Present instruction) he translates the entire paper from the original language into a secret code which only he and George can understand, then hands these translated instructions to his brother, who carries them out."

This second mental model explains performance characteristics that might otherwise seem rather odd. For instance, if we use a CPU profiler to see what Charles is doing, then draw a model containing 1,000,000 triangles, we will notice the CPU takes hardly any time to process this draw call. How can that be? Is it really so cheap to draw a million triangles?

The explanation is that Charles didn't actually do any work when he got this instruction, merely jotting it down on his "instructions for George" list. You can write down an instruction which says "draw a million triangles" just as quickly as one saying "draw 3 triangles", although these will seem quite different to George when he later gets around to processing them.
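To make the "piece of paper" idea concrete, here is a minimal sketch in Python. Everything in it (`DrawCommand`, `Gpu`, `CommandList`, the `capacity` of four entries) is a hypothetical name or number invented for illustration, not how any real driver or API is written. It also hands work to the GPU synchronously, whereas the real George works in parallel with Charles.

```python
from dataclasses import dataclass, field


@dataclass
class DrawCommand:
    """One line on the paper: 'draw this many triangles'."""
    triangle_count: int


@dataclass
class Gpu:
    """George: only does work when a batch of commands is handed over."""
    triangles_drawn: int = 0

    def execute(self, commands):
        for command in commands:
            # For George, the cost depends on how many triangles there are.
            self.triangles_drawn += command.triangle_count


@dataclass
class CommandList:
    """Charles's piece of paper (capacity is an arbitrary, made-up size)."""
    gpu: Gpu
    capacity: int = 4
    pending: list = field(default_factory=list)

    def draw(self, triangle_count):
        # Writing the note is a single append, whether it says
        # "3 triangles" or "1,000,000 triangles".
        self.pending.append(DrawCommand(triangle_count))
        if len(self.pending) >= self.capacity:
            self.flush()  # the paper has filled up

    def flush(self):
        # Hand the whole sheet to George and start a fresh one.
        self.gpu.execute(self.pending)
        self.pending = []

    def present(self):
        # A Present instruction also forces the hand-over.
        self.flush()
```

Calling `draw(1_000_000)` on a fresh `CommandList` returns immediately and leaves `gpu.triangles_drawn` at zero. The triangles only get drawn once `present()` is called or the list fills up.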

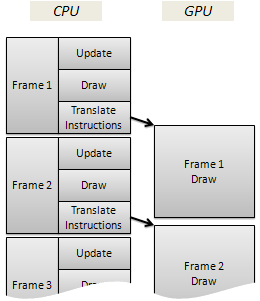

This parallel nature of CPU and GPU is tremendously important when it comes to understanding how they perform. In a perfectly tuned game, both processors should be running flat out doing useful work, something like this (time is along the vertical axis, increasing down the page):

Notice how the GPU doesn't start processing the draw instructions for frame 1 until after the CPU is entirely finished with that frame, and the CPU is busy with frame 2 at the same time as the GPU is drawing the previous frame.

In the real world, it is hard to make your CPU and GPU workload exactly equal. If you have more CPU than GPU work, the timeline looks like this:

Notice how the GPU is sometimes idle, after it has finished the work from the previous frame but not yet received any instructions for the next.

This is called being "CPU bound". The interesting thing about this situation is that if we optimized our code to reduce the amount of GPU work, that would make absolutely no difference to anything. The GPU would be spending less time drawing and more time idle, but the CPU would still be running flat out, so our final framerate would stay the same. Even more interesting, if we can find a way to do more things on the GPU without also costing any extra CPU, we could add more graphical effects entirely for free!

The other way around, look at what happens if you have more GPU work than CPU work:

In this situation the CPU has finished with frame 2, and is ready to hand it over to the GPU, but the GPU says "hey, wait a moment! I'm not ready for that yet; I'm still busy drawing the first frame". So the CPU has to sit around doing nothing until the GPU is ready to receive new instructions.

This is called being "GPU bound". In this situation, optimizing our CPU code will make no difference, because the GPU is the limiting factor on our final framerate. We could add more CPU work "for free", replacing that idle time with useful game processing.
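The two timelines above can be approximated with a toy simulation. This is a rough sketch under simplifying assumptions of my own: every frame costs a fixed `cpu_ms` and `gpu_ms`, and the CPU can only hand over a frame once the GPU has finished the previous one. The function name `simulate` and all the numbers are made up for illustration.

```python
def simulate(cpu_ms, gpu_ms, frames):
    """Toy model of the CPU/GPU pipeline described above.

    Returns the total elapsed time plus how long each processor sat idle.
    """
    cpu_free = 0.0  # when the CPU can start working on its next frame
    gpu_free = 0.0  # when the GPU finishes the frame it is drawing
    cpu_idle = 0.0
    gpu_idle = 0.0

    for frame in range(frames):
        cpu_done = cpu_free + cpu_ms
        # The hand-over happens once BOTH sides are ready.
        handoff = max(cpu_done, gpu_free)
        cpu_idle += handoff - cpu_done      # CPU waiting on the GPU
        if frame > 0:
            gpu_idle += handoff - gpu_free  # GPU waiting on the CPU
        gpu_free = handoff + gpu_ms         # GPU draws this frame
        cpu_free = handoff                  # CPU moves on to the next frame

    return {
        "total_ms": gpu_free,
        "cpu_idle_ms": cpu_idle,
        "gpu_idle_ms": gpu_idle,
    }


cpu_bound = simulate(cpu_ms=10, gpu_ms=5, frames=100)
gpu_bound = simulate(cpu_ms=5, gpu_ms=10, frames=100)
less_gpu_work = simulate(cpu_ms=10, gpu_ms=2, frames=100)
```

With these invented numbers, the CPU bound run leaves the GPU idle for 495 ms out of 1005 ms. Cutting the GPU cost from 5 ms to 2 ms per frame only shortens the total to 1002 ms: the GPU just spends more time idle.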

In order to optimize successfully, you must understand whether your game is CPU bound or GPU bound. If you don't know this, you might waste time optimizing for the wrong processor. But once you do know it, you can improve your game by adding more effects to whichever processor is currently sitting idle.

So how do you tell which is which?

People sometimes oversimplify this to something along the lines of "graphics calls run on the GPU, while physics and gameplay logic run on the CPU", or even "my Update method runs on the CPU, while Draw runs on the GPU".

Not so! Don't forget that Charles must translate your drawing instructions into a format which George can understand. If there are many instructions, it will take him a long time to translate them all.

If you have a small number of instructions which draw a large amount of stuff, this will be quick for Charles to translate but slower for George to draw. But if you have many instructions that each only draw a small amount of stuff (for instance a million draw calls with a single triangle per call, or many changes to settings such as renderstates or effect parameters), this may end up taking longer for Charles to translate.

Even if your program contains nothing but drawing instructions, with no update logic at all, it is still possible that it might require more CPU time than it does GPU time, depending on how much translation and coordinating work is required.
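A back-of-the-envelope version of this argument, as a Python sketch. The cost constants here are pure assumptions picked to make the arithmetic easy (a fixed CPU translation cost per draw call, a fixed GPU cost per triangle). Real costs vary enormously by hardware, driver, and what each call does, and `estimate_bottleneck` is a hypothetical helper, not a real profiling tool.

```python
def estimate_bottleneck(draw_calls, triangles_per_call,
                        cpu_ns_per_call=10_000,   # assumed, not measured
                        gpu_ns_per_triangle=10):  # assumed, not measured
    """Crude cost model for a frame that contains only drawing.

    Charles pays per instruction he has to translate;
    George pays per triangle he has to draw.
    """
    cpu_ns = draw_calls * cpu_ns_per_call
    gpu_ns = draw_calls * triangles_per_call * gpu_ns_per_triangle

    if cpu_ns > gpu_ns:
        verdict = "CPU bound"
    elif gpu_ns > cpu_ns:
        verdict = "GPU bound"
    else:
        verdict = "balanced"
    return verdict, cpu_ns, gpu_ns


# Same million triangles, batched two very different ways.
one_big_call = estimate_bottleneck(draw_calls=1, triangles_per_call=1_000_000)
many_tiny_calls = estimate_bottleneck(draw_calls=1_000_000, triangles_per_call=1)
```

Under these assumed costs, the GPU work is identical in both cases, but the single big call comes out GPU bound while the million tiny calls come out heavily CPU bound, even though the program does nothing except draw.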

To figure out whether we are CPU or GPU bound, we must call in Sherlock Holmes. Stay tuned...